- #HOW TO INSTALL APACHE SPARK FOR PYTHON SERIES#

- #HOW TO INSTALL APACHE SPARK FOR PYTHON DOWNLOAD#

- #HOW TO INSTALL APACHE SPARK FOR PYTHON WINDOWS#

#HOW TO INSTALL APACHE SPARK FOR PYTHON WINDOWS#

Apache Spark is an open-source framework and a general-purpose cluster computing system. On Windows, right-click your Windows menu and select Control Panel, System and Security, and then System.
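This sketch assumes that the System settings page above is where you add environment variables (via Advanced system settings > Environment Variables). Under that assumption, the values usually involved can be written out in Python. JAVA_HOME, SPARK_HOME and HADOOP_HOME are the standard variable names that Java, Spark and Hadoop read. The helper name `spark_env_vars` is our own illustrative choice, and so are its default directories (c:\jdk, c:\spark, c:\winutils, taken from the paths used in this guide).

```python
import ntpath  # Windows path rules, so output is identical on any OS


def spark_env_vars(jdk_dir=r"c:\jdk", spark_dir=r"c:\spark", winutils_dir=r"c:\winutils"):
    """Return (variables, PATH entries) for a typical Windows Spark setup.

    Hypothetical helper: the default directories mirror the paths used in this guide.
    """
    env = {
        "JAVA_HOME": jdk_dir,
        "SPARK_HOME": spark_dir,
        # Hadoop looks for %HADOOP_HOME%\bin\winutils.exe on Windows
        "HADOOP_HOME": winutils_dir,
    }
    # Folders to append to PATH so that java, spark-shell and pyspark resolve
    path_entries = [ntpath.join(d, "bin") for d in (jdk_dir, spark_dir)]
    return env, path_entries


if __name__ == "__main__":
    env, path_entries = spark_env_vars()
    for name, value in env.items():
        print(f"{name}={value}")
    print("Append to PATH:", ";".join(path_entries))
```

Running the script prints the name=value pairs to enter in the Environment Variables dialog. It uses `ntpath` so that the Windows-style paths come out the same even if you run the sketch on another operating system.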

This blog covers the basic steps to install and configure Apache Spark (a popular distributed computing framework) as a cluster. Open the c:\spark\conf folder, and make sure “File Name Extensions” is checked in the “View” tab of Windows Explorer. Rename log4j.properties.template to log4j.properties. Then edit this file (using WordPad or something similar) and change the error level from INFO to ERROR for log4j.rootCategory. (If you are on a 32-bit version of Windows, you’ll need to search for a 32-bit build of winutils.exe for Hadoop.)
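You can also script the log4j edit. This is a minimal sketch that assumes the older Spark layout, in which the conf folder holds log4j.properties.template with a line such as `log4j.rootCategory=INFO, console`. The function names are hypothetical. The sketch copies the template rather than renaming it, so the original file stays available.

```python
import re
import shutil
from pathlib import Path

# Matches the root logger line and captures everything before the level
_ROOT = re.compile(r"^(log4j\.rootCategory\s*=\s*)INFO\b", re.MULTILINE)


def quiet_log4j_text(text):
    """Change the root log level from INFO to ERROR, leaving the appenders untouched."""
    return _ROOT.sub(r"\1ERROR", text)


def quiet_spark_logging(conf_dir):
    """Create log4j.properties from the template if needed, then set ERROR level.

    Raises FileNotFoundError if neither the file nor the template exists.
    """
    conf = Path(conf_dir)
    target = conf / "log4j.properties"
    template = conf / "log4j.properties.template"
    if not target.exists() and template.exists():
        shutil.copyfile(template, target)
    target.write_text(quiet_log4j_text(target.read_text()))
    return target
```

Usage on Windows would be `quiet_spark_logging(r"c:\spark\conf")`. Spark 3.3 and later ship a log4j2.properties.template that sets the level through `rootLogger.level` instead, so this sketch applies only to older releases.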

#HOW TO INSTALL APACHE SPARK FOR PYTHON SERIES#

Simply Install is a series of blogs covering installation instructions for simple tools related to data engineering. Connect to the AWS instance with SSH and follow the steps below to install Java and Scala. To connect to the EC2 instance, enter `ssh -i 'securitykey.pem'` followed by the user name and address of your instance.
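Before connecting, note that ssh refuses a private key file that other users can read. The sketch below uses hypothetical helper names. On a POSIX system, it checks the key's permissions and builds the argument list for the connection. The user name and host are placeholders that you must replace with your instance's values. The default user depends on the AMI; for example, it is `ubuntu` on Ubuntu images.

```python
import os
import stat


def key_is_private(key_path):
    """Return True if group and others have no access to the key file.

    OpenSSH rejects private keys that are readable by group or others.
    """
    mode = os.stat(key_path).st_mode
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0


def ssh_command(key_path, user, host):
    """Build the argument list for connecting to an EC2 instance with a key pair."""
    return ["ssh", "-i", key_path, f"{user}@{host}"]
```

If the check fails, `os.chmod("securitykey.pem", 0o400)` tightens the permissions. `subprocess.run(ssh_command("securitykey.pem", user, host))` then opens the session.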

#HOW TO INSTALL APACHE SPARK FOR PYTHON DOWNLOAD#

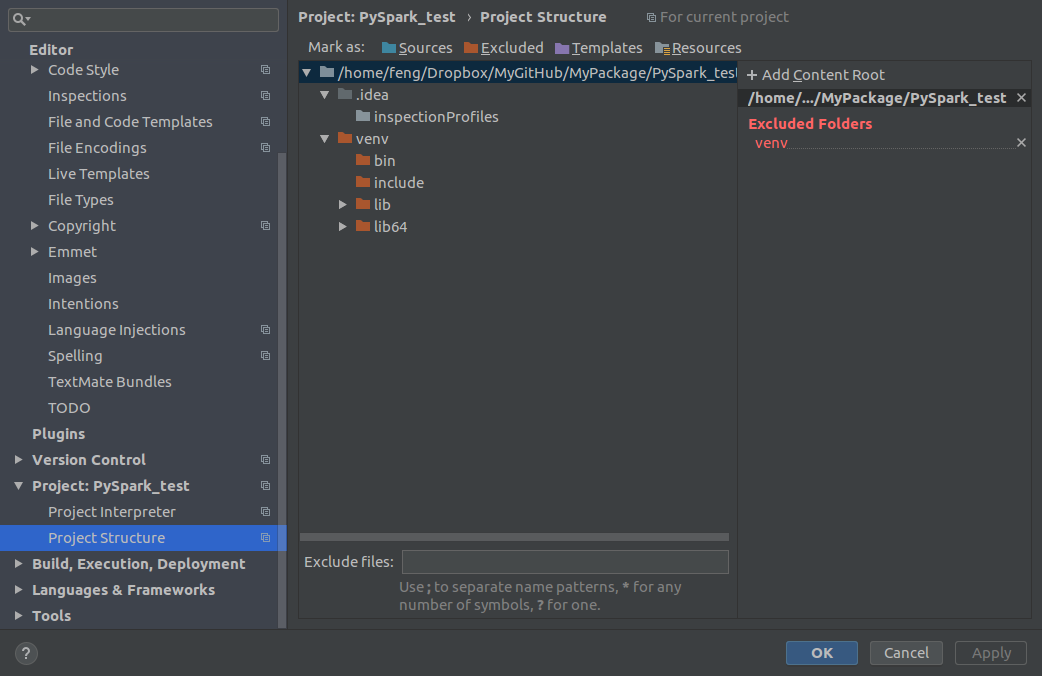

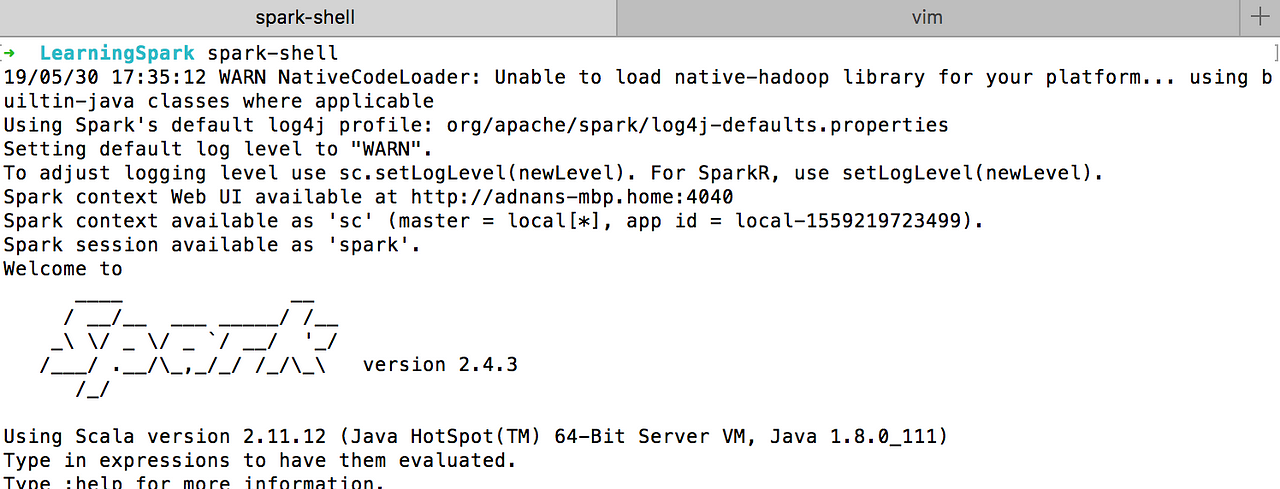

To install Spark, we have two dependencies to take care of. Once they are in place, all that remains is to install Spark and Hadoop themselves; let’s install both onto our AWS instance. Navigate to the Apache Spark download page and download a Spark release directly. Next, we will install a Python library that connects Java and Scala with Python. If the Spark shell runs on your system, you can also use Python in this environment.

On Windows, create a C:\spark directory, extract the Spark archive, and copy its contents into C:\spark. You should end up with directories like c:\spark\bin, c:\spark\conf, etc. Download winutils.exe and move it into a C:\winutils\bin folder that you’ve created.

To make Spark’s Python package visible to your IDE project, navigate to Project Structure -> click ‘Add Content Root’ -> go to the folder where Spark is set up -> select the python folder.
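Extracting the archive and copying its contents into C:\spark can be scripted with Python’s standard library. This is a minimal sketch. It assumes a .tgz release that unpacks to a single top-level folder, as the official pre-built Spark packages do. `install_spark_archive` is a hypothetical helper, and its check for bin and conf mirrors the expected layout described above.

```python
import shutil
import tarfile
import tempfile
from pathlib import Path


def install_spark_archive(archive, target):
    """Extract a Spark .tgz and copy the contents of its top-level folder into target.

    Hypothetical helper: assumes the archive unpacks to one folder such as
    spark-<version>-bin-hadoop<version>, which is flattened into target.
    """
    target = Path(target)
    with tempfile.TemporaryDirectory() as tmp:
        with tarfile.open(archive, "r:gz") as tar:
            # Use the safer "data" extraction filter where this Python supports it
            if hasattr(tarfile, "data_filter"):
                tar.extractall(tmp, filter="data")
            else:
                tar.extractall(tmp)
        entries = list(Path(tmp).iterdir())
        # With a single top-level folder, copy what is inside it, not the folder itself
        root = entries[0] if len(entries) == 1 and entries[0].is_dir() else Path(tmp)
        shutil.copytree(root, target, dirs_exist_ok=True)
    # Verify the layout the guide expects (target\bin, target\conf)
    missing = [d for d in ("bin", "conf") if not (target / d).is_dir()]
    if missing:
        raise FileNotFoundError(f"expected folders missing under {target}: {missing}")
    return target
```

For example, `install_spark_archive(r"C:\Downloads\spark.tgz", r"C:\spark")` would do the Windows step. The download path here is a placeholder.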

What is Apache Spark? Apache Spark is a distributed, open-source, general-purpose framework for cluster computing. It is designed with computational speed in mind, for workloads ranging from machine learning to stream processing.

On Windows, install a JDK (Java Development Kit). DO NOT INSTALL JAVA 9; INSTALL JAVA 8, because the Spark releases this guide targets are not compatible with Java 9 (newer Spark 3.x releases also run on Java 11). Be sure to change the default location for the installation! You must install the JDK into a path with no spaces, for example c:\jdk. Next, download a pre-built version of Apache Spark. If necessary, download and install WinRAR so you can extract the downloaded archive.

On the AWS instance, you will need Java, Scala, and Git as prerequisites for installing Spark. We can install them using the following command: `sudo apt install default-jdk scala git -y`. Then, get the latest Apache Spark version, extract its contents, and move them to a separate directory.
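Before installing Spark, you can confirm which Java version the system actually picks up by parsing the output of `java -version`. This is a sketch with hypothetical helper names. It relies on a version-format difference: Java 8 and earlier report versions as 1.x (for example 1.8.0_281), while Java 9 and later report the major number first.

```python
import re
import subprocess


def java_major_version(version_output):
    """Return the major Java version from `java -version` output.

    Java 8 and earlier print e.g. "1.8.0_281"; Java 9+ print "9.0.4", "11.0.20", "17".
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("could not find a version string")
    first, second = match.group(1), match.group(2)
    # Legacy scheme: "1.<major>..." means the real major number is the second field
    if first == "1" and second is not None:
        return int(second)
    return int(first)


def installed_java_major():
    """Run `java -version` (which prints to stderr) and parse the major version."""
    result = subprocess.run(["java", "-version"], capture_output=True, text=True, check=True)
    return java_major_version(result.stderr)
```

To follow the Java 8 advice above, check that `installed_java_major()` returns 8. On the AWS instance, the same function reports whichever version `default-jdk` installed.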
